Spatio-Spectral Graph Neural Networks (S²GNN)

Abstract

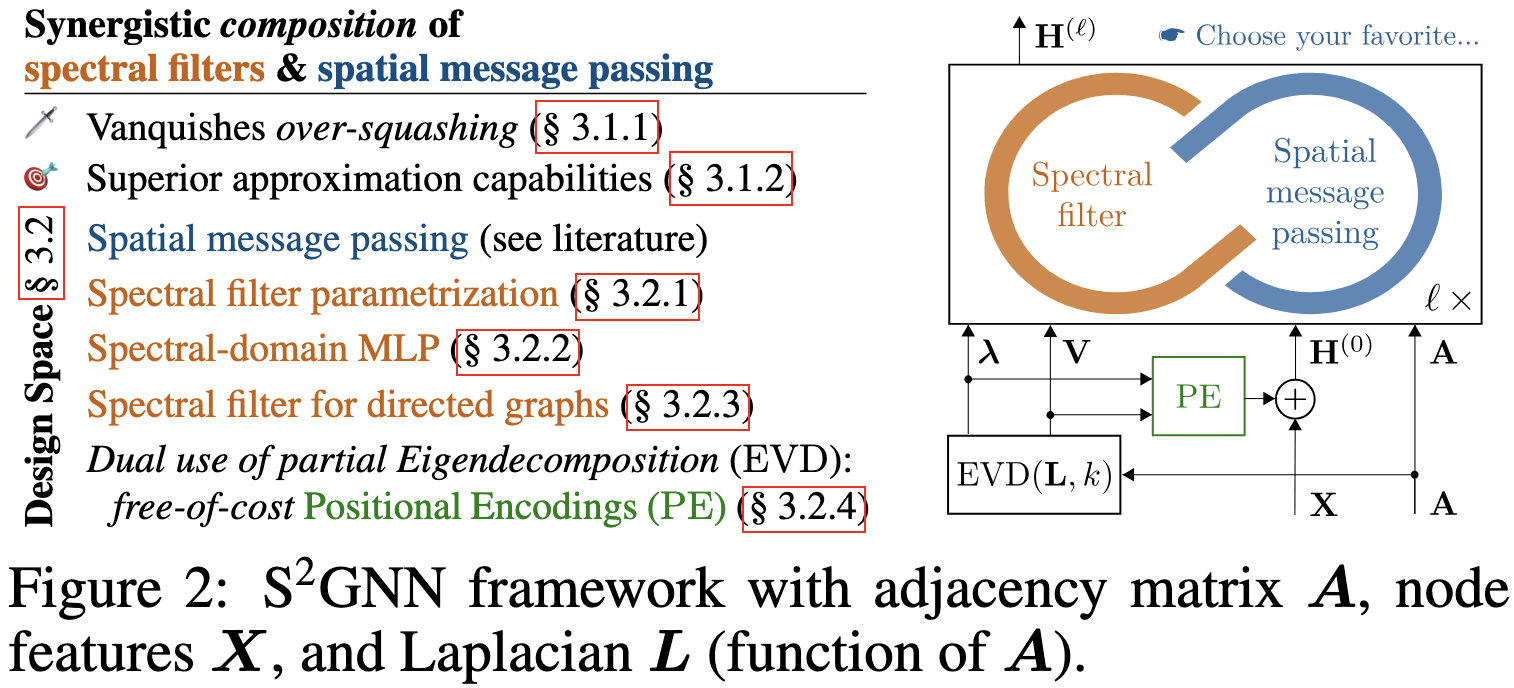

Spatial Message Passing Graph Neural Networks (MPGNNs) are widely used for learning on graph-structured data. However, key limitations of l-step MPGNNs are that their "receptive field" is typically limited to the l-hop neighborhood of a node and that information exchange between distant nodes is limited by over-squashing. Motivated by these limitations, we propose Spatio-Spectral Graph Neural Networks (S²GNNs) – a new modeling paradigm for Graph Neural Networks (GNNs) that synergistically combines spatially and spectrally parametrized graph filters. Parameterizing filters partially in the frequency domain enables global yet efficient information propagation. We show that S²GNNs vanquish over-squashing and yield strictly tighter approximation-theoretic error bounds than MPGNNs. Further, rethinking graph convolutions at a fundamental level unlocks new design spaces. For example, S²GNNs allow for free positional encodings that make them strictly more expressive than the 1-Weisfeiler-Lehman (WL) test. Moreover, to obtain general-purpose S²GNNs, we propose spectrally parametrized filters for directed graphs. S²GNNs outperform spatial MPGNNs, graph transformers, and graph rewirings, e.g., on the peptide long-range benchmark tasks, and are competitive with state-of-the-art sequence modeling. On a 40 GB GPU, S²GNNs scale to millions of nodes.
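To make the contrast between spatial and spectral filtering concrete, here is a minimal NumPy sketch. It is not the authors' implementation. The function names (`normalized_laplacian`, `spatial_filter`, `spectral_filter`), the exponential gain, the truncation to the `k` lowest-frequency eigenvectors, and the additive combination are all assumptions made for illustration. On a 6-node path graph, one hop of spatial aggregation moves a signal from node 0 only to its direct neighbour, while the truncated spectral filter reaches the far end of the path in a single step.

```python
import numpy as np

def normalized_laplacian(adj):
    """Symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}."""
    deg = adj.sum(axis=1)
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    return np.eye(adj.shape[0]) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]

def spatial_filter(adj, x):
    """One hop of mean aggregation over neighbours (a simple spatial filter)."""
    deg = np.maximum(adj.sum(axis=1, keepdims=True), 1.0)
    return adj @ x / deg

def spectral_filter(lap, x, k, gain):
    """Filter x using only the k lowest-frequency Laplacian eigenvectors."""
    eigvals, eigvecs = np.linalg.eigh(lap)   # eigenvalues in ascending order
    vals, vecs = eigvals[:k], eigvecs[:, :k]
    # Project to the truncated spectrum, scale by the gain, project back.
    return vecs @ (gain(vals)[:, None] * (vecs.T @ x))

# Undirected path graph 0 - 1 - 2 - 3 - 4 - 5.
n = 6
adj = np.zeros((n, n))
idx = np.arange(n - 1)
adj[idx, idx + 1] = adj[idx + 1, idx] = 1.0

# Signal concentrated on node 0.
x = np.zeros((n, 1))
x[0, 0] = 1.0

lap = normalized_laplacian(adj)
spatial_out = spatial_filter(adj, x)
spectral_out = spectral_filter(lap, x, k=3, gain=lambda lam: np.exp(-lam))

# Assumed additive combination of the two filter outputs.
combined = spatial_out + spectral_out
```

In this sketch `spatial_out` is zero at node 5, whereas `spectral_out` is not, which illustrates why a partial frequency-domain parametrization can propagate information globally with only a few eigenvectors.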

Cite

Please cite our paper if you use the method in your own work:

@inproceedings{geisler2024_spatio-spectral_graph_neural_networks,
  title = {Spatio-Spectral Graph Neural Networks},
  author = {Geisler, Simon and Kosmala, Arthur and Herbst, Daniel and G\"unnemann, Stephan},
  booktitle = {Thirty-eighth Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2024},
}