Efficient Robustness Certificates for Discrete Data: Sparsity-Aware Randomized Smoothing for Graphs, Images and More

This page links to additional material for our paper "Efficient Robustness Certificates for Discrete Data: Sparsity-Aware Randomized Smoothing for Graphs, Images and More"

by Aleksandar Bojchevski, Johannes Gasteiger and Stephan Günnemann

Published at the International Conference on Machine Learning (ICML) 2020

Links

[Paper | Supplementary material | Presentation (ICML) | GitHub]

Abstract

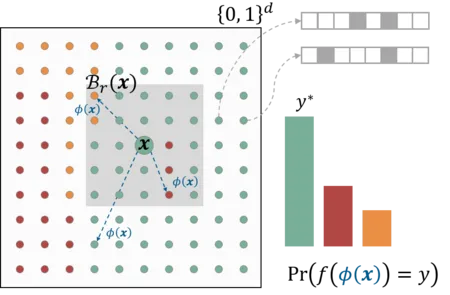

Existing techniques for certifying the robustness of models for discrete data either work only for a small class of models or are general at the expense of efficiency or tightness. Moreover, they do not account for sparsity in the input which, as our findings show, is often essential for obtaining non-trivial guarantees. We propose a model-agnostic certificate based on the randomized smoothing framework which subsumes earlier work and is tight, efficient, and sparsity-aware. Its computational complexity does not depend on the number of discrete categories or the dimension of the input (e.g. the graph size), making it highly scalable. We show the effectiveness of our approach on a wide variety of models, datasets, and tasks -- specifically highlighting its use for Graph Neural Networks. So far, obtaining provable guarantees for GNNs has been difficult due to the discrete and non-i.i.d. nature of graph data. Our method can certify any GNN and handles perturbations to both the graph structure and the node attributes.
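To make the idea of sparsity-aware smoothing more concrete, here is a minimal sketch of how a smoothed prediction for a binary input could be computed. It is an illustration under stated assumptions, not the implementation from the GitHub repository: we assume the noise flips zeros to ones with a small probability `p_plus` and ones to zeros with a separate probability `p_minus`, and that the smoothed classifier returns the majority vote over noisy samples. The function names, the parameter names, and the toy base classifier are hypothetical, and the sketch does not compute the certificate itself.

```python
import numpy as np


def sample_sparse_flips(x, p_plus, p_minus, rng):
    """Return a randomly perturbed copy of the binary vector x.

    Assumption for this sketch: zeros flip to one with probability
    p_plus, ones flip to zero with probability p_minus. Choosing
    p_plus much smaller than p_minus keeps sparse inputs sparse.
    """
    x = np.asarray(x, dtype=bool)
    u = rng.random(x.shape)  # uniform samples in [0, 1)
    # Use a different flip probability depending on the current bit.
    flip = np.where(x, u < p_minus, u < p_plus)
    return np.logical_xor(x, flip).astype(np.int64)


def smoothed_predict(base_classifier, x, p_plus, p_minus,
                     n_samples, num_classes, seed=0):
    """Monte Carlo majority vote of base_classifier over noisy inputs."""
    rng = np.random.default_rng(seed)
    votes = np.zeros(num_classes, dtype=np.int64)
    for _ in range(n_samples):
        noisy = sample_sparse_flips(x, p_plus, p_minus, rng)
        votes[base_classifier(noisy)] += 1
    return int(votes.argmax()), votes


def toy_classifier(x):
    """Hypothetical base model: class 1 if at least three bits are set."""
    return int(x.sum() >= 3)


# A sparse binary input: 4 ones out of 20 entries.
x = np.zeros(20, dtype=np.int64)
x[:4] = 1

prediction, votes = smoothed_predict(
    toy_classifier, x, p_plus=0.01, p_minus=0.2,
    n_samples=500, num_classes=2,
)
print(prediction, votes)
```

Because the base classifier is only queried as a black box, the same loop would work for any model, which is the sense in which such a scheme is model-agnostic. A certificate would additionally need a statistical bound on the vote probabilities, which this sketch leaves out.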

Cite

Please cite our paper if you use the method in your own work:

@inproceedings{bojchevski_sparsesmoothing_2020,
  title = {Efficient Robustness Certificates for Discrete Data: Sparsity-Aware Randomized Smoothing for Graphs, Images and More},
  author = {Bojchevski, Aleksandar and Gasteiger, Johannes and G{\"u}nnemann, Stephan},
  booktitle = {Proceedings of Machine Learning and Systems 2020},
  pages = {11647--11657},
  year = {2020}
}