Prof. Dr. Rüdiger Westermann

Rüdiger Westermann is Professor of Computer Science at the Technical University of Munich. He heads the Chair of Computer Graphics and Visualization and is one of the main drivers of the Visual Computing and Computer Games initiatives at TUM.

Technical University of Munich

Informatics 15 - Chair of Computer Graphics and Visualization (Prof. Westermann)

Postal address

Boltzmannstr. 3

85748 Garching b. München

Place of employment

Informatics 15 - Chair of Computer Graphics and Visualization (Prof. Westermann)

Boltzmannstr. 3(5613)/II

85748 Garching b. München

- Phone: +49 (89) 289-19456

- Fax: +49 (89) 289-19462

- Room: 5613.02.054

- Email: westermann@tum.de

Research

In the following, I highlight some of our research in the fields of scientific visualization, data science, and interactive computer graphics. For a complete list of our work, please see our publication page.

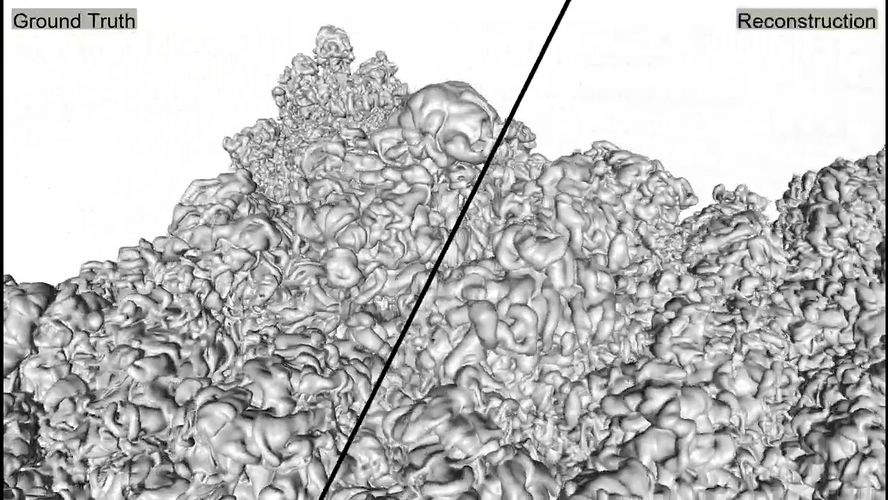

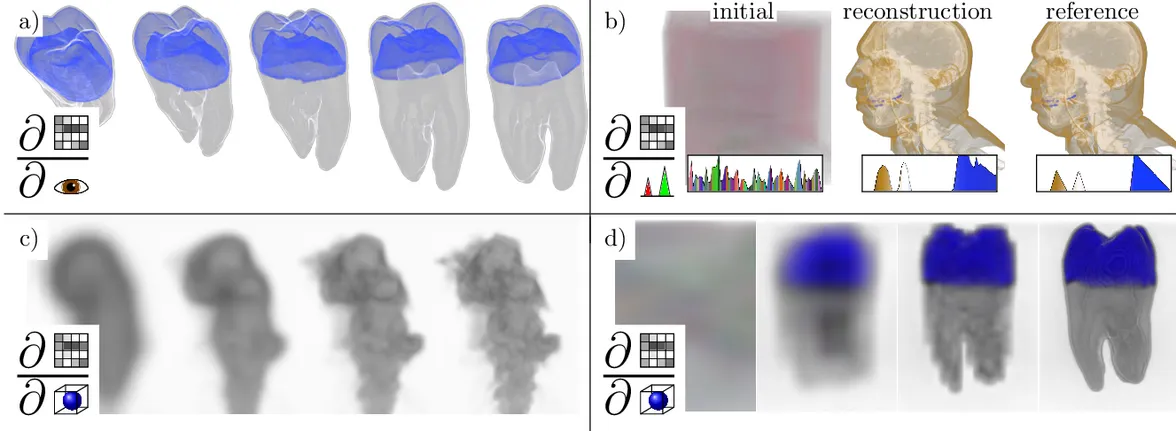

Reconstructing Volumetric Fields from Single Views

Given a single view of a volume, our method DPS (Diffusion Posterior Sampling) reconstructs a 3D field, via its latent representation, that matches the view under the same lighting conditions. A differentiable path tracer optimizes the physical scene parameters with stochastic gradient descent in parameter space, while posterior sampling conditioned on the observation is performed simultaneously in the latent space of a trained diffusion model.

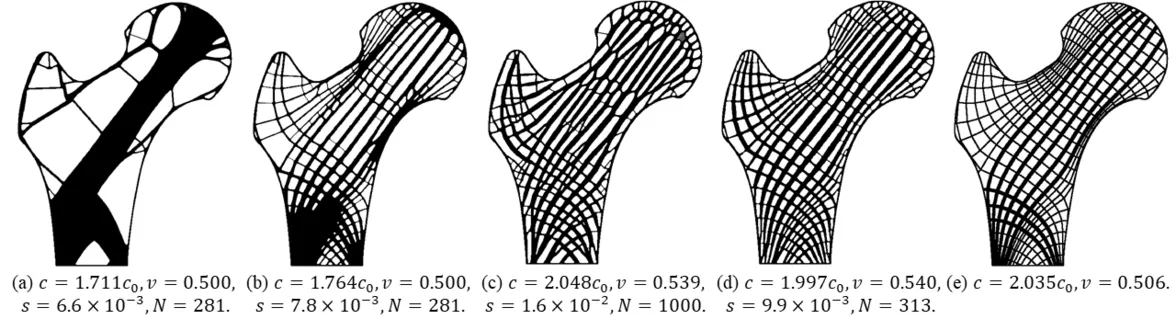

SGLDBench: A Benchmark Suite for Stress-Guided Lightweight 3D Designs

Finally, it's here: the Stress-Guided Lightweight Design Benchmark (SGLDBench). SGLDBench is a comprehensive benchmark suite for applying and evaluating material layout strategies that generate stiff, lightweight designs in 3D domains. It provides a seamlessly integrated, highly efficient simulation and analysis framework, including six reference strategies and a scalable multigrid elasticity solver that executes these strategies efficiently and validates the stiffness of their results.

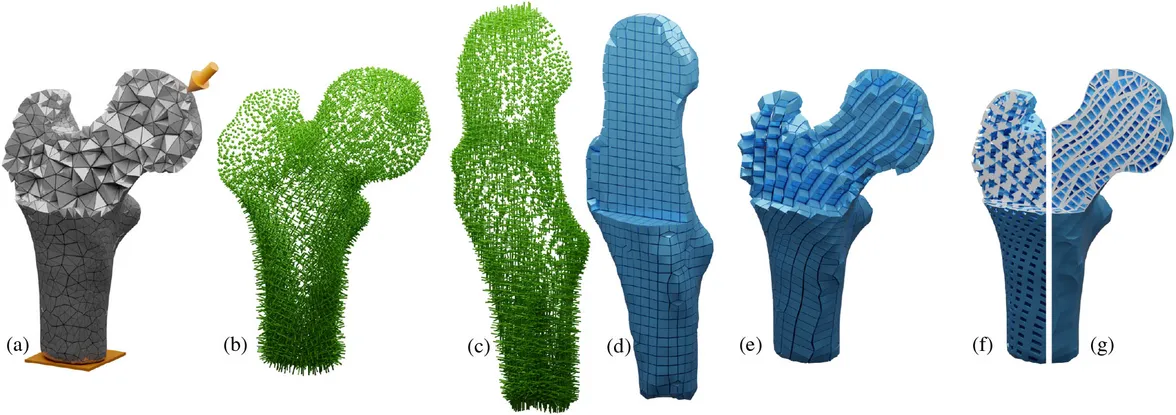

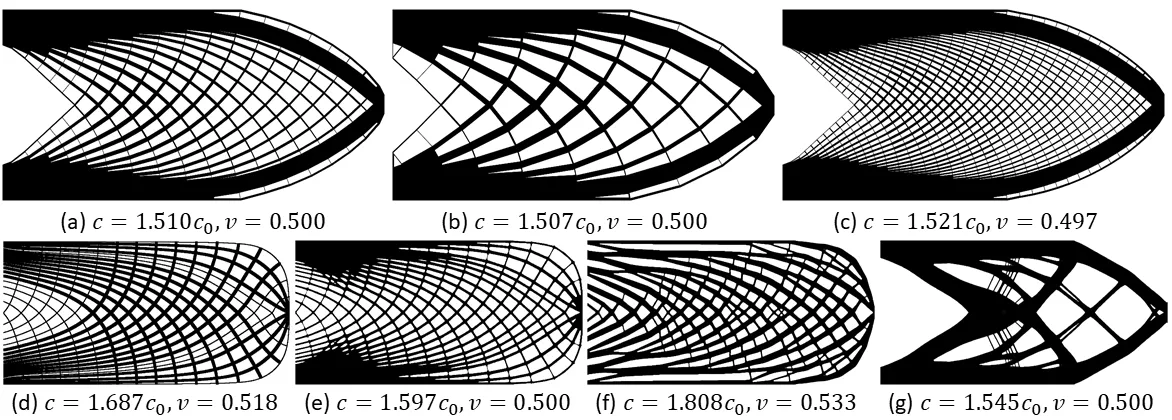

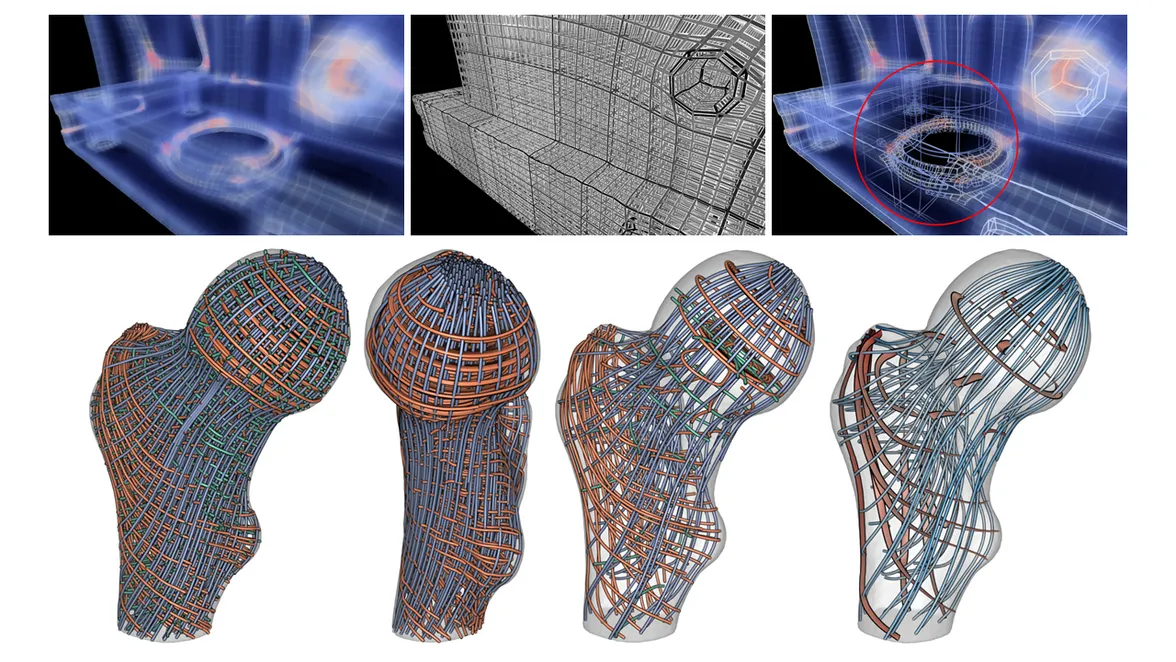

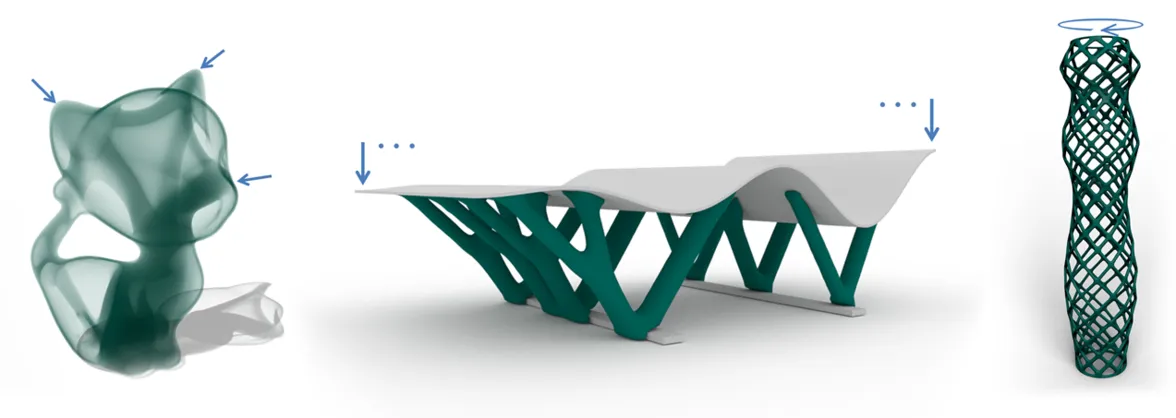

Stress-Aligned Hexahedral Lattice Structures

Maintaining the maximum stiffness of components with as little material as possible is an overarching objective in computational design and engineering. It is well-established that in stiffness-optimal designs, material is aligned with orthogonal principal stress directions. In the limit of material volume, this alignment forms micro-structures resembling quads or hexahedra. Achieving a globally consistent layout of such orthogonal micro-structures presents a significant challenge, particularly in three-dimensional settings. We propose a novel geometric algorithm for compiling stress-aligned hexahedral lattice structures. Our method involves deforming an input mesh under load to align the resulting stress field along an orthogonal basis.

Cinematic Anatomy

Interactive photorealistic rendering of 3D anatomy is used in medical education to explain the structure of the human body. It is currently restricted to frontal teaching scenarios, since interactive demonstrations can hardly be achieved even with a powerful GPU and high-speed access to the large storage device that hosts the data set. We present the use of novel view synthesis via compressed 3D Gaussian Splatting (3DGS) to overcome this restriction and to even enable students to perform cinematic anatomy on lightweight and mobile devices. Our proposed pipeline first finds a set of camera poses that captures all potentially visible structures in the data. High-quality images are then generated with path tracing and converted into a compact 3DGS representation that requires less than 70 MB even for data sets of several GB. This enables real-time photorealistic novel view synthesis that recovers structures down to voxel resolution and is almost indistinguishable from the path-traced images.

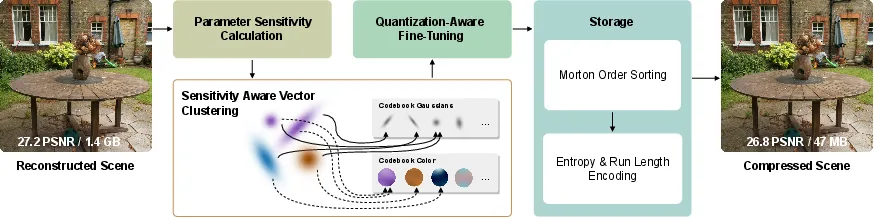

Compressed 3D Gaussian Splatting with Hardware Rasterization

We propose a compressed 3D Gaussian splat representation that utilizes sensitivity-aware vector clustering with quantization-aware training to compress directional colors and Gaussian parameters. The learned codebooks have low bitrates and achieve a compression rate of up to $31\times$ on real-world scenes with only minimal degradation of visual quality.
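To make the codebook idea concrete, here is a minimal sketch of vector quantization under simplifying assumptions: per-splat attribute vectors are clustered with k-means, each codeword is the sensitivity-weighted mean of its members, and every splat then stores only a codebook index. The weights, the deterministic farthest-point initialization, the function names, and the sizes in the usage example are illustrative assumptions; the quantization-aware training of the actual method is not modeled.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(v, codebook):
    """Index of the codeword closest to v."""
    return min(range(len(codebook)), key=lambda c: sq_dist(v, codebook[c]))


def weighted_kmeans(vectors, weights, k, iters=20):
    """Cluster vectors into k codewords; each codeword is the weighted mean
    of its members (the weights stand in for per-splat sensitivities)."""
    dim = len(vectors[0])
    # Deterministic farthest-point (maximin) initialization.
    codebook = [list(vectors[0])]
    while len(codebook) < k:
        far = max(vectors, key=lambda v: min(sq_dist(v, c) for c in codebook))
        codebook.append(list(far))
    for _ in range(iters):
        labels = [nearest(v, codebook) for v in vectors]
        for c in range(k):
            members = [(v, w) for v, w, lab in zip(vectors, weights, labels) if lab == c]
            wsum = sum(w for _, w in members)
            if wsum > 0:  # an empty cluster keeps its previous codeword
                codebook[c] = [sum(w * v[d] for v, w in members) / wsum for d in range(dim)]
    # Final assignment against the updated codebook: one index per splat.
    return codebook, [nearest(v, codebook) for v in vectors]


def compression_ratio(num_splats, dim, k, bytes_per_value=4):
    """Raw float storage vs. codebook plus a ceil(log2 k)-bit index per splat."""
    raw = num_splats * dim * bytes_per_value
    index_bits = math.ceil(math.log2(k))
    compressed = k * dim * bytes_per_value + num_splats * index_bits / 8
    return raw / compressed
```

With, say, 100,000 splats carrying 48 color coefficients each (assuming degree-3 spherical harmonics, 16 coefficients per RGB channel) and a 4096-entry codebook, `compression_ratio(100_000, 48, 4096)` gives about 20.5. These sizes are made up, and the sketch ignores the remaining Gaussian parameters, so the number is not comparable to the rates reported above.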

We demonstrate that the compressed splat representation can be efficiently rendered with hardware rasterization on lightweight GPUs at framerates up to $4\times$ higher than those reported for an optimized GPU compute pipeline.

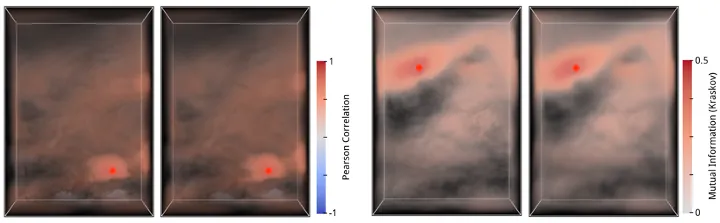

CorrNet - Correlation Neural Network

We have recently introduced CorrNet, a neural network trained to reconstruct point-to-point correlations in large 3D ensemble fields. CorrNet has learned to infer 1500 billion point-to-point Pearson correlation or mutual information estimates in a 1000-member simulation ensemble. Inferring the dependencies between the data values at an arbitrary grid vertex and those at all other vertices of a 250 × 352 × 20 grid takes 9 ms on a high-end GPU. The network has been trained on less than 0.01 percent of all point-to-point pairs and requires only 1 GB of memory at runtime.
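As a point of reference, the quantity CorrNet approximates can be computed by brute force: the Pearson correlation between the ensemble values at a selected vertex and those at every other vertex. The sketch below assumes a simple layout (one list of member values per vertex) and uses hypothetical function names; it covers Pearson correlation only, not mutual information. The pair count also checks out: a 250 × 352 × 20 grid has 1,760,000 vertices and thus about 1.55 × 10^12 distinct vertex pairs, consistent with the figure of 1500 billion estimates.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sample lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # undefined for constant inputs (sx or sy == 0)


def correlation_map(ensemble, ref):
    """Correlate vertex `ref` with every vertex.
    ensemble[v] holds the values of vertex v across all members (assumed layout)."""
    return [pearson(ensemble[ref], values) for values in ensemble]


def distinct_pairs(nx, ny, nz):
    """Number of unordered vertex pairs in an nx x ny x nz grid."""
    n = nx * ny * nz
    return n * (n - 1) // 2
```

The brute-force cost grows with the number of members for every single vertex pair, which illustrates why a learned approximation is attractive at this scale. Vertices with constant values (zero variance) would need special handling.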

Neural Volume Compression and Rendering

We have addressed the question of whether neural volume representation networks can effectively compress and efficiently reconstruct volumetric data sets, and whether they can even be used for temporal reconstruction tasks. We have proposed a novel design of such networks that uses GPU tensor cores to integrate the reconstruction seamlessly into on-chip ray-tracing kernels. Comparisons to alternative implementations and compression schemes show significantly improved performance at compression rates on par with those of the most effective competitors. For time-varying fields, we have proposed a solution that builds upon latent-space interpolation to enable random-access reconstruction at arbitrary granularity and with high fidelity.

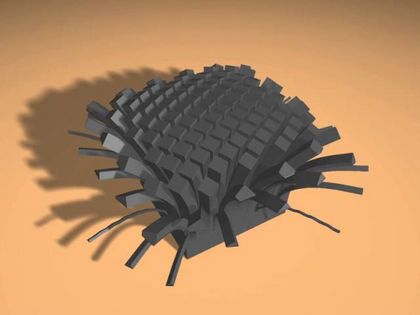

Topology Optimization for Structural Design

We have built upon our previous work to radically change the way so-called de-homogenization is performed, in order to efficiently design high-resolution load-bearing structures. Our new approach builds upon a streamline-based parametrization of the design domain, but now uses direction fields obtained from homogenization-based topology optimization, i.e., an optimization that computes an optimal distribution of continuous material values instead of a binary design. Streamlines in these fields are then converted into a quad-dominant mesh, and the widths of the grid edges are adjusted according to the density and anisotropy of the optimized cells. In a number of numerical examples, we have demonstrated the mechanical performance and regular appearance of the resulting structural designs.

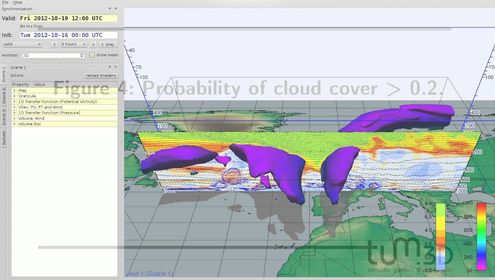

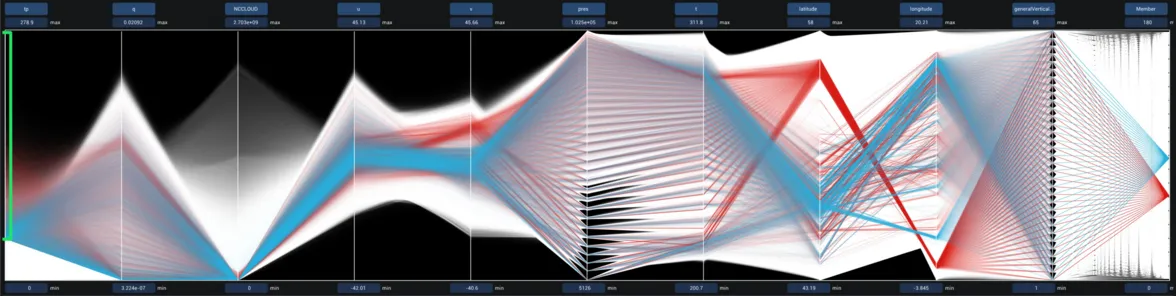

Scalable Multi-Parameter Visualization

Within the Collaborative Research Center "Waves to Weather", funded by the German Research Foundation, we work together with meteorologists to develop efficient visual analysis techniques for multi-parameter data, i.e., sets of data points with potentially many parameter values per data point. We have proposed a scalable GPU realization of parallel coordinates that builds upon 2D pairwise attribute bins to significantly reduce the number of lines to be rendered. This makes parallel coordinates applicable to weather forecast ensembles comprising billions of data points, each carrying multiple prognostic floating-point variables like temperature, precipitation, and pressure, plus spatial and simulation input variables.
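The binning step can be sketched on the CPU as follows, assuming attribute values normalized to [0, 1] and uniform bins (both assumptions, as are the function names): for every pair of adjacent axes, points are counted in a 2D histogram, and each non-empty bin becomes one line segment between the two axes whose count can drive its opacity or width.

```python
from collections import Counter


def pairwise_bins(points, num_bins):
    """For each adjacent axis pair (j, j + 1), count points per 2D bin.
    points: tuples of attribute values normalized to [0, 1].
    Returns one Counter per axis pair, mapping (bin_j, bin_j1) -> count."""
    dims = len(points[0])

    def bin_of(x):
        # Clamp so that x == 1.0 falls into the last bin.
        return min(int(x * num_bins), num_bins - 1)

    return [Counter((bin_of(p[j]), bin_of(p[j + 1])) for p in points)
            for j in range(dims - 1)]


def bin_segments(histograms, num_bins):
    """Turn every non-empty bin into one weighted line segment:
    (axis index j, height on axis j, height on axis j + 1, point count)."""
    segments = []
    for j, counts in enumerate(histograms):
        for (b0, b1), n in counts.items():
            # Segment endpoints sit at the bin centers on both axes.
            segments.append((j, (b0 + 0.5) / num_bins, (b1 + 0.5) / num_bins, n))
    return segments
```

With 256 bins per axis, each axis pair yields at most 256 × 256 = 65,536 segments, independent of the number of points binned.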

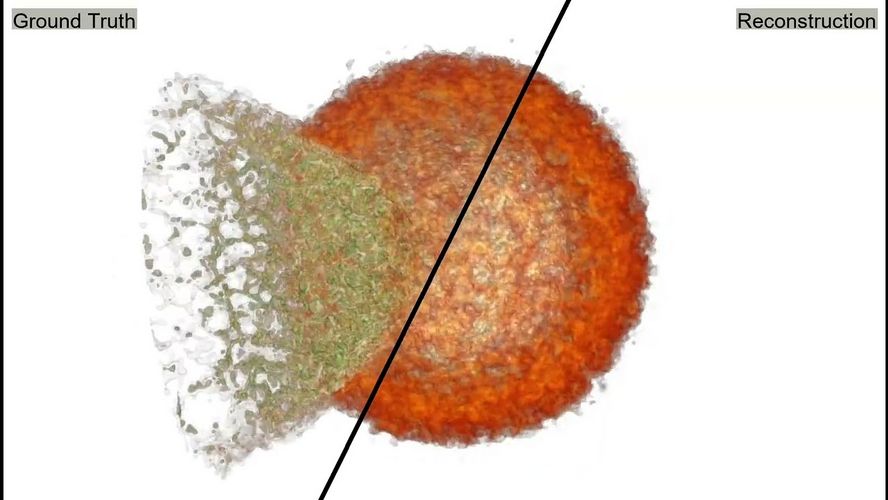

Volume Reconstruction via Differentiable Volume Rendering

Can a volumetric field, or a color transfer function used to visualize this field, be reconstructed from a few images of the field? To address this question, we have presented a differentiable volume rendering solution that is differentiable with respect to all continuous parameters of the volume rendering process. This differentiable renderer is used to steer the parameters towards an optimum of a problem-specific objective function. We have tailored the approach to volume rendering by enforcing a constant memory footprint via analytic inversion of the blending functions. This makes the memory requirement independent of the number of sampling steps through the volume and facilitates the consideration of small-scale changes.
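The invertibility that enables a constant memory footprint can be illustrated with front-to-back emission-absorption compositing along one ray. As long as every sample opacity is below one, each blending step can be undone, so a backward pass can reconstruct all intermediate accumulated colors and opacities from the final values instead of storing them. The sketch uses scalar (gray) colors and hypothetical function names, and shows only the inversion, not the gradient propagation.

```python
def composite(samples):
    """Front-to-back blending of (color, alpha) samples along one ray."""
    C, A = 0.0, 0.0
    for c, a in samples:
        C = C + (1.0 - A) * a * c
        A = A + (1.0 - A) * a
    return C, A


def invert_step(C_next, A_next, c, a):
    """Recover the accumulated (C, A) before one blending step from the
    state after it. Requires a < 1, otherwise the step is not invertible."""
    A = (A_next - a) / (1.0 - a)
    C = C_next - (1.0 - A) * a * c
    return C, A


def rewind(samples, C_final, A_final):
    """Walk the ray backwards and reconstruct every intermediate state
    without having stored it during the forward pass."""
    C, A = C_final, A_final
    states = [(C, A)]
    for c, a in reversed(samples):
        C, A = invert_step(C, A, c, a)
        states.append((C, A))
    return states[::-1]  # states[i] = accumulated values after i samples
```

The inversion divides by 1 - a, so it becomes numerically unstable as sample opacities approach one; a practical implementation has to guard against this case.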

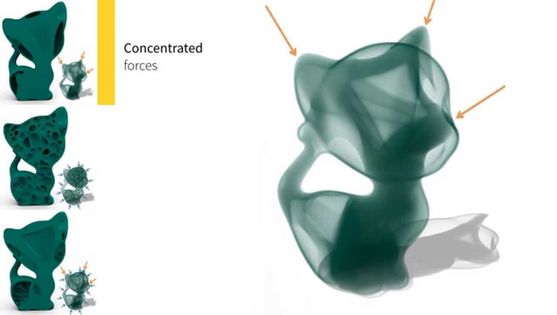

Stressline-Guided Topology Optimization

We have introduced a new topology optimization approach for structural design that is guided by stresslines in a solid under load. This is particularly appealing from a computational perspective, since it avoids iterative finite element optimizations. By regularizing the thickness of each stressline using derived strain energy measures, stiff structural layouts are generated in a highly efficient way. We have then proposed a method that uses the resulting structures as initial density fields in density-based topology optimization, and demonstrated that using a stressline-guided density initialization in lieu of a uniform density field significantly relaxes convergence issues in density-based topology optimization, at comparable stiffness of the resulting structural layouts.

Mesh Visualization

We have used advanced GPU shader functionality to build a focus+context mesh renderer that highlights the elements in a selected region and simultaneously conveys the global mesh structure and deformation field. To this end, we have developed a new method to construct a sheet-based level-of-detail hierarchy and smoothly blend it with volumetric information. A gradual transition from edge-based focus rendering to volumetric context rendering is achieved by combining fragment shader-based edge and face rendering with per-pixel fragment lists.
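Per-pixel fragment lists support order-independent transparency in two passes: during rasterization, every fragment (depth, color, opacity) is appended to the list of its pixel, and a resolve pass sorts each list by depth and blends it front to back. The following CPU sketch models a single pixel; on the GPU, such lists are commonly stored as linked lists in a shader storage buffer, and the `Fragment` layout here is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Fragment:
    depth: float
    rgb: tuple  # (r, g, b), each in [0, 1]
    alpha: float


def resolve_pixel(fragments, background=(0.0, 0.0, 0.0)):
    """Sort one pixel's fragment list by depth and blend front to back."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for frag in sorted(fragments, key=lambda f: f.depth):
        for i in range(3):
            color[i] += transmittance * frag.alpha * frag.rgb[i]
        transmittance *= 1.0 - frag.alpha
        if transmittance < 1e-4:  # early exit once the pixel is nearly opaque
            break
    # Whatever light is left shows the background.
    return tuple(c + transmittance * b for c, b in zip(color, background))
```

Because the resolve pass sorts explicitly, the fragments may arrive in any order, which is the point of the technique.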

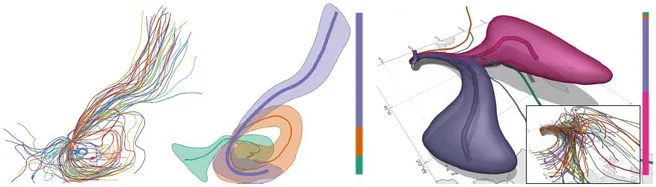

With 3D-TSV, we have introduced a visual analysis tool for the exploration of the principal stress directions in 3D solids under load. 3D-TSV provides a modular and generic implementation of key algorithms required for a trajectory-based visual analysis of principal stress directions, including the automatic seeding of space-filling stress lines, their extraction using numerical schemes, their mapping to an effective renderable representation, and rendering options to convey structures with special mechanical properties.
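The core of trajectory-based stress analysis can be sketched in 2D: the principal stress directions are the eigenvectors of the symmetric stress tensor, and a stress line is traced by stepping along the major eigenvector. Because eigenvectors carry no orientation, each new direction is flipped to agree with the previous step. The 2D setting, the explicit Euler integration, and the user-supplied `stress_at` function are simplifying assumptions; 3D-TSV operates on 3D tensor fields with its own numerical schemes.

```python
import math


def principal_stresses_2d(sxx, syy, sxy):
    """Eigen-decomposition of the symmetric tensor [[sxx, sxy], [sxy, syy]].
    Returns (major stress, minor stress, unit major direction)."""
    mean = 0.5 * (sxx + syy)
    radius = math.hypot(0.5 * (sxx - syy), sxy)  # Mohr's circle radius
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # angle of the major direction
    return mean + radius, mean - radius, (math.cos(theta), math.sin(theta))


def trace_stress_line(stress_at, seed, step=0.05, n_steps=100):
    """Integrate a major stress line from `seed` with explicit Euler steps.
    stress_at(x, y) -> (sxx, syy, sxy) is a hypothetical stress field."""
    x, y = seed
    points = [(x, y)]
    prev = None
    for _ in range(n_steps):
        _, _, (dx, dy) = principal_stresses_2d(*stress_at(x, y))
        # Eigenvectors are sign-ambiguous: keep the direction consistent.
        if prev is not None and dx * prev[0] + dy * prev[1] < 0:
            dx, dy = -dx, -dy
        x, y = x + step * dx, y + step * dy
        points.append((x, y))
        prev = (dx, dy)
    return points
```

The minor direction is perpendicular to the major one, so tracing along it only requires rotating the returned vector by 90 degrees.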

Learning to Sample

Can neural networks learn where to sample an object so that an accurate image of the object can be reconstructed from only a few samples? We have made a first step towards answering this question by learning correspondences between the data, the sampling patterns, and the generated images. We have introduced a novel neural rendering pipeline that is trained end-to-end to generate a sparse adaptive sampling structure from a given low-resolution input image, and to reconstruct a high-resolution image from the sparse set of samples. For the first time, to the best of our knowledge, we demonstrate that the selection of structures that are relevant for the final visual representation can be learned jointly with the reconstruction of this representation from these structures.

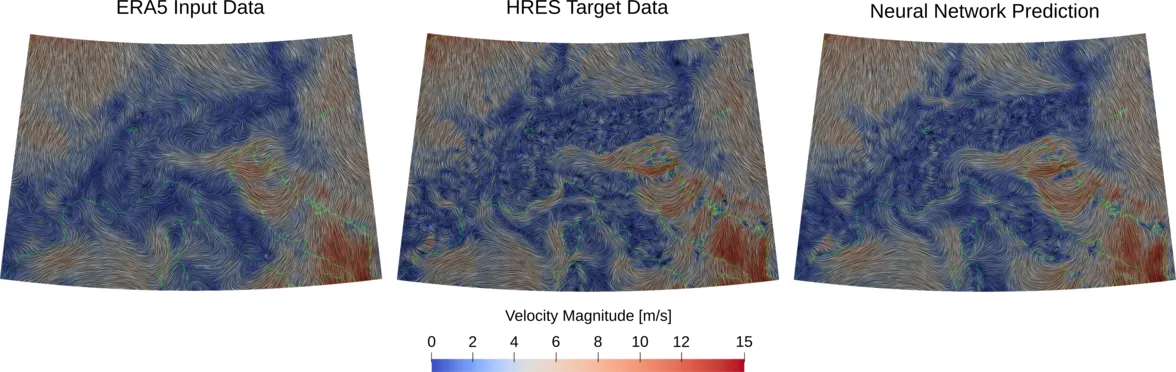

Deep Weather

Within the Collaborative Research Center "Waves to Weather", funded by the German Research Foundation, we continue our research at the interface between meteorology and computer science. In the second funding period, one key focus is on Deep Weather, i.e., the use of artificial neural networks for data inference related to weather forecasting. First results showing the potential of neural networks for down-scaling, i.e., inferring high-resolution wind fields from given low-resolution wind fields and their relation to high-resolution topography and boundary conditions, were presented at the ECMWF Copernicus meeting 2019.

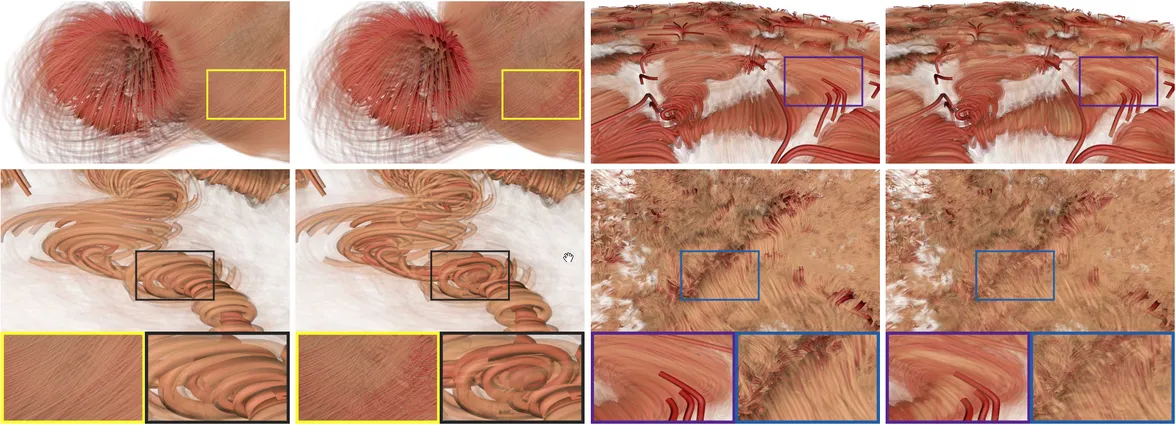

Deep Volume Rendering

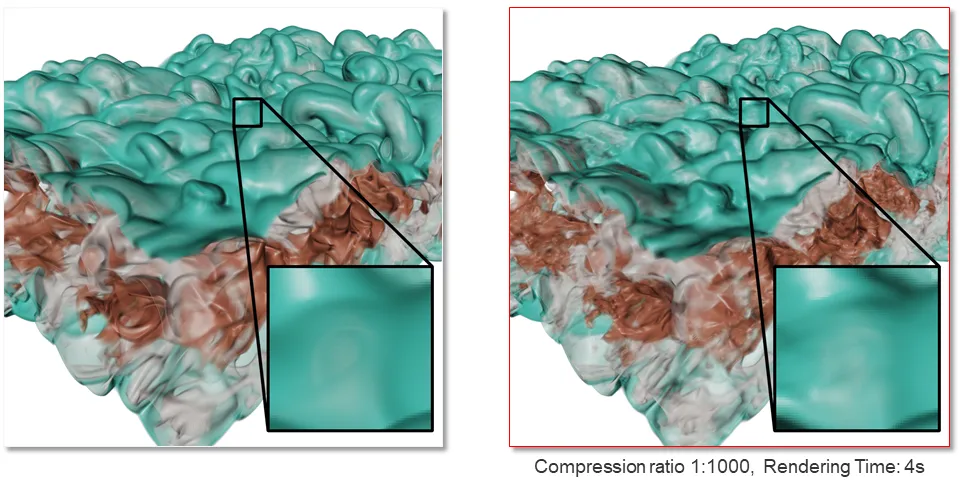

Over the last two decades, we have shaped the landscape of volume rendering through our development of GPU-based techniques, effective acceleration structures, and efficient compression schemes. Now it's time for another innovation in this field: the use of deep learning to reduce the number of samples that are needed to render a volumetric data set.

Our results demonstrate that artificial neural networks can learn to infer the geometric properties of isosurfaces in volumetric scalar fields, rather than learning a specific isosurface. The accompanying images show the network-based inference (right) from low-resolution images with 1/16 of the samples (left). This makes it possible to significantly reduce the number of volumetric samples that need to be taken to generate an image of an isosurface. Our research now aims at extending this work towards direct volume rendering, and at using neural networks to guide renderers towards specific data features.

Rendering Transparency

In an elaborate evaluation, we have investigated CPU and GPU rendering techniques for large transparent 3D line sets. We compare accurate and approximate techniques using a number of benchmark data sets. Besides optimized implementations of rasterization-based object- and image-order methods, we consider Intel’s OSPRay CPU ray-tracing framework, a GPU ray-tracer using NVIDIA’s RTX ray-tracing interface through the Vulkan API, and voxel-based GPU line ray-tracing.

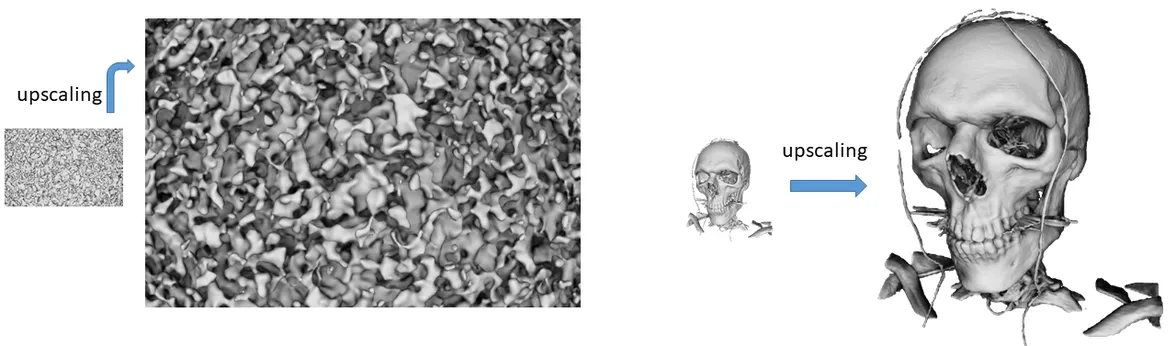

Computational Fabrication & 3D Printing

Recently, together with colleagues from TUD and DTU, we have pursued research on topology optimization for 3D fabrication and printing. We work on the development of scalable system designs for topology optimization, which allow us to efficiently evolve the topology of high-resolution solids towards printable, lightweight, and highly resistant structures. We also investigate new methods to simulate porous structures such as trabecular bone, which are widespread in nature. These structures are lightweight and exhibit strong mechanical properties, and they might be well suited for implant design.

Uncertainty and Ensemble Visualization

In the scope of my ERC Advanced Grant “SaferVis”, we pursue research on the modelling and visualization of uncertainty in scientific data sets. I’m also a PI in the Transregional Collaborative Research Center 165 “Waves to Weather”, funded by the German Research Foundation. My group helps to explore the limits of predictability in weather forecasting by visualizing the uncertainty that is represented by ensemble forecasts. We recently published a new probabilistic method to visualize trends and outliers in ensembles of particle trajectories, and we released the open-source visualization system Met.3D for meteorological ensembles. Building upon SaferVis, I successfully applied for an ERC Proof of Concept (PoC) grant in 2017, in which we investigate the potential of Met.3D to improve forecasting, training, and communication to the public.

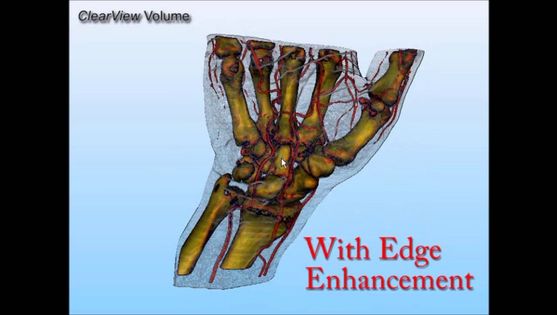

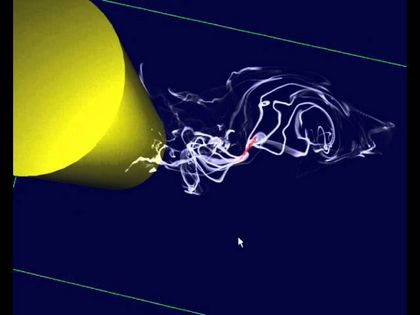

Interactive Data Visualization

We are continually striving to provide interactive visualization techniques for multi-dimensional scientific data sets such as those generated by medical imaging techniques or numerical simulations. Our research covers focus+context techniques for 3D scalar fields as well as feature-based techniques for flow fields. Recently, we have introduced a new focus+context technique for flow visualization, based on a balanced flowline hierarchy and view-dependent line thinning.

Videos: Clear View, Flow Visualization

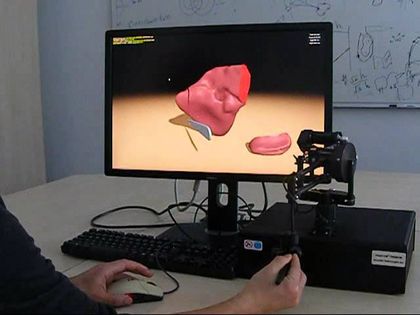

Deformable Body Simulation, Cutting and Collisions

We pursue research on real-time finite-element simulations of elastic bodies. We have considerably improved the efficiency of numerical multigrid schemes in situations with complicated boundaries by combining adaptively refined hexahedral elements with cell duplication. GPU support enables the use of tens of thousands of elements in interactive environments. Our research on topology changes and collision detection for deformable bodies (including a STAR report on real-time haptic virtual cutting) has spawned a number of activities in the field. Our CGI paper on collision detection was even imitated (see this 2015 SIGGRAPH Asia paper).

Videos: Real-Time Haptic Cutting of High-Resolution Soft Tissues, Cutting FEM, CUDA FEM

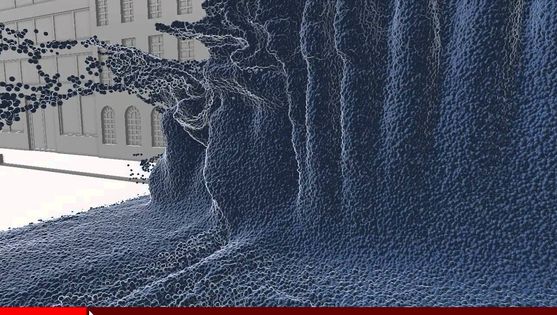

Scalable Point and Particle Rendering

In some recent research projects we have addressed the widening gap between the ability to generate data by sensors or numerical simulations, and the ability to interactively render this data. We have developed GPU data structures and rendering algorithms to enable scalable online reconstruction of depth streams from a Kinect sensor as well as interactive visual inspection of scanned point sets and simulated particle sets comprising hundreds of millions of elements.

Videos: Hybrid Rendering, Interactive Rendering of Giga-Particle Fluid Simulations

GPU-Based Compression for Large-Scale Visualization

The performance of visualization techniques is increasingly limited by memory bandwidth when data is read from disk into main memory and transferred from main memory to GPU memory. To overcome this limitation, we have developed compression schemes for terrain fields and volumetric scalar and vector fields, which allow for on-the-fly decoding of the data on the GPU and keep memory transfer during rendering to a minimum. Based on these schemes, we have developed some of the most throughput-efficient visualization systems for large-scale terrain fields as well as volumetric turbulence data and astrophysical particle simulations.

Videos: Scalable Terrain Rendering, Seq3, Millennium Vis

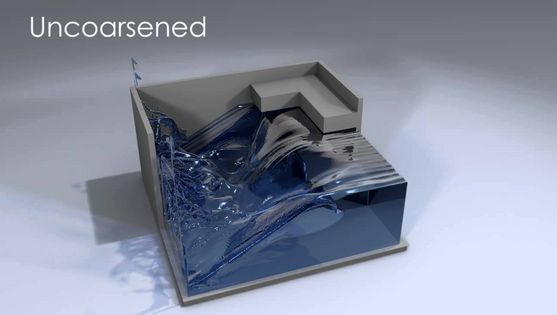

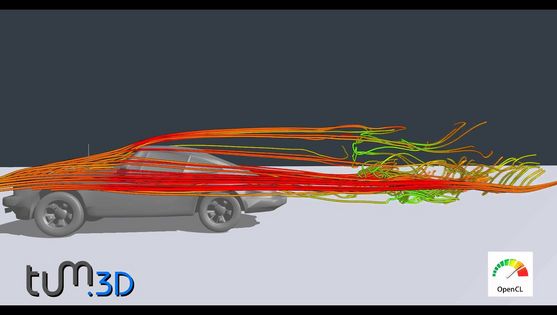

Multigrid Solvers for Scalable Physics-based Simulation and Computational Steering

High-performance computing systems are increasingly used to perform computationally and memory-bandwidth intensive numerical simulations in interactive environments, letting users guide a simulation towards some region of interest or monitor the effects caused by interactive modifications of input parameters. Such mechanisms can change the “traditional” way of modelling and simulation on HPC resources. We have demonstrated some of the most efficient computational steering environments for interactive fluid simulation and topology optimization. We have developed novel numerical multigrid schemes that retain their efficiency even in the most complicated simulation scenarios, and that can simulate at extreme effective resolutions due to the use of hierarchical model representations.

Videos: Large-Scale Liquid Simulation on Adaptive Hexahedral Grids, GPU Fluid, High-Resolution Topology Optimization
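To illustrate the multigrid principle, here is a textbook geometric V-cycle for the 1D Poisson problem -u'' = f with zero boundary values: weighted Jacobi sweeps damp high-frequency error, the residual is restricted by full weighting to a grid with half the resolution, the coarse-grid correction is interpolated back, and the scheme recurses down to a single unknown. This generic sketch does not reproduce the adaptive hexahedral or GPU solvers mentioned above; the grid size of 2^k + 1 points and the smoothing parameters are assumptions.

```python
def residual(u, f, h):
    """r = f - A u, with A the 3-point discretization of -d^2/dx^2."""
    r = [0.0] * len(u)
    for i in range(1, len(u) - 1):
        r[i] = f[i] - (2.0 * u[i] - u[i - 1] - u[i + 1]) / (h * h)
    return r


def smooth(u, f, h, sweeps, omega=2.0 / 3.0):
    """Weighted Jacobi relaxation; boundary values stay fixed."""
    for _ in range(sweeps):
        old = u[:]
        for i in range(1, len(u) - 1):
            jacobi = 0.5 * (old[i - 1] + old[i + 1] + h * h * f[i])
            u[i] = (1.0 - omega) * old[i] + omega * jacobi
    return u


def restrict(r):
    """Full weighting onto the grid made of every second point."""
    nc = (len(r) - 1) // 2 + 1
    rc = [0.0] * nc
    for i in range(1, nc - 1):
        rc[i] = 0.25 * r[2 * i - 1] + 0.5 * r[2 * i] + 0.25 * r[2 * i + 1]
    return rc


def prolong(ec, n):
    """Linear interpolation of a coarse correction to n fine points."""
    e = [0.0] * n
    for i, value in enumerate(ec):
        e[2 * i] = value
    for i in range(1, n, 2):
        e[i] = 0.5 * (e[i - 1] + e[i + 1])
    return e


def v_cycle(u, f, h, pre=2, post=2):
    """One V-cycle on a grid with 2^k + 1 points and spacing h."""
    n = len(u)
    if n <= 3:
        # Coarsest grid: a single interior unknown, solved exactly.
        if n == 3:
            u[1] = 0.5 * (u[0] + u[2] + h * h * f[1])
        return u
    smooth(u, f, h, pre)
    rc = restrict(residual(u, f, h))
    ec = v_cycle([0.0] * len(rc), rc, 2.0 * h, pre, post)
    for i, e in enumerate(prolong(ec, n)):
        u[i] += e
    return smooth(u, f, h, post)
```

For f = 1, the discrete solution is exactly x(1 - x)/2, because the three-point stencil is exact for quadratics, which makes the sketch easy to check. Repeated V-cycles reduce the error at a rate that does not depend on the grid size, the property that makes multigrid attractive for scalable simulation.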

Biography

Rüdiger Westermann, born in May 1966, is Professor of Computer Science at the Technical University of Munich. He is head of the Chair of Computer Graphics and Visualization.

He received his Diploma in Computer Science from the Technical University of Darmstadt in 1991 and his doctoral degree "with highest honours" from the University of Dortmund in 1996. From 1992 to 1997 he was a member of the research staff at the German National Institute for Mathematics and Computer Science in St. Augustin, Bonn, where he worked together with Wolfgang Krüger on parallel graphics algorithms. In 1998, he joined the Computer Graphics Group at the University of Erlangen-Nuremberg as a research scientist. Before he became an Assistant Professor in the Visualization Group at the University of Stuttgart in 1999, he was a Research Assistant in the Multires Group at Caltech and a Visiting Professor with the Scientific Computing Laboratory at the University of Utah. In 2001 he was appointed by RWTH Aachen as an Associate Professor for Scientific Visualization in the Department of Computer Science. Since 2003, Rüdiger Westermann has been Chair of the Computer Graphics and Visualization group. In 2012, he was awarded an ERC Advanced Grant worth 2.3 million euros for research in the area of uncertainty visualization.

Teaching Activities

- Computer Graphics

- Game Engine Design

- Data Visualization

- Geometric Modelling and Character Animation

- Geometric Modelling and Visualization

- Image Synthesis

- Simulation and Animation

- Discrete Structures

- Introduction to Computer Science

- Introduction to Programming

- Game Programming