Unified Mechanism-Specific Amplification by Subsampling and Group Privacy Amplification

This page links to additional material for our paper

Unified Mechanism-Specific Amplification by Subsampling and Group Privacy Amplification

by Jan Schuchardt, Mihail Stoian*, Arthur Kosmala*, Stephan Günnemann

Published at Neural Information Processing Systems (NeurIPS) 2024

Links

Abstract

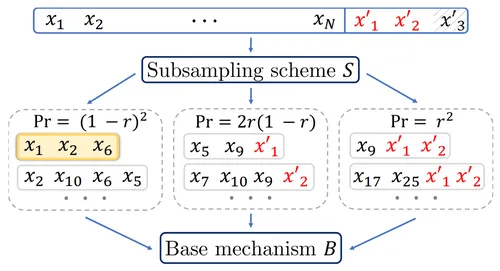

Amplification by subsampling is one of the main primitives in machine learning with differential privacy (DP): Training a model on random batches instead of complete datasets results in stronger privacy. This is traditionally formalized via mechanism-agnostic subsampling guarantees that express the privacy parameters of a subsampled mechanism as a function of the original mechanism's privacy parameters. We propose the first general framework for deriving mechanism-specific guarantees, which leverage additional information beyond these parameters to more tightly characterize the subsampled mechanism's privacy. Such guarantees are of particular importance for *privacy accounting*, i.e., tracking privacy over multiple iterations. Overall, our framework based on conditional optimal transport lets us derive existing and novel guarantees for approximate DP, accounting with Rényi DP, and accounting with dominating pairs in a unified, principled manner. As an application, we analyze how subsampling affects the privacy of groups of multiple users. Our tight mechanism-specific bounds outperform tight mechanism-agnostic bounds and classic group privacy results.
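For readers new to the topic, the two baselines mentioned in the abstract can be sketched in a few lines. The snippet below is not the paper's method: it only illustrates the well-known mechanism-agnostic amplification bound for Poisson subsampling under the add/remove neighboring relation, and the classic group privacy bound for approximate DP. The function names and the parameter `q` (sampling rate) are our own choices for illustration.

```python
import math


def amplify_by_poisson_subsampling(eps: float, delta: float, q: float) -> tuple[float, float]:
    """Mechanism-agnostic amplification (illustrative baseline, not the paper's bound).

    If the base mechanism is (eps, delta)-DP and each record is included
    independently with probability q, the subsampled mechanism is
    (log(1 + q * (exp(eps) - 1)), q * delta)-DP under add/remove neighbors.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("sampling rate q must lie in [0, 1]")
    # log1p/expm1 keep the computation accurate for small eps and q.
    eps_sub = math.log1p(q * math.expm1(eps))
    return eps_sub, q * delta


def classic_group_privacy(eps: float, delta: float, k: int) -> tuple[float, float]:
    """Classic group privacy: (eps, delta)-DP implies
    (k * eps, k * exp((k - 1) * eps) * delta)-DP for groups of size k."""
    if k < 1:
        raise ValueError("group size k must be at least 1")
    return k * eps, k * math.exp((k - 1) * eps) * delta


if __name__ == "__main__":
    eps, delta, q, k = 1.0, 1e-5, 0.01, 4
    print("subsampled:", amplify_by_poisson_subsampling(eps, delta, q))
    print("group of size", k, ":", classic_group_privacy(eps, delta, k))
```

Both functions take only the base privacy parameters as input, which is exactly what makes them mechanism-agnostic; the mechanism-specific guarantees described in the abstract use additional information about the mechanism to tighten such bounds.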

Cite

Please cite our paper if you use the method in your own work:

@InProceedings{schuchardt2024_unified,

author = {Schuchardt, Jan and Stoian, Mihail and Kosmala, Arthur and G{\"u}nnemann, Stephan},

title = {Unified Mechanism-Specific Amplification by Subsampling and Group Privacy Amplification},

booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},

year = {2024}

}