Diffusion Improves Graph Learning

This page is about our paper:

Diffusion Improves Graph Learning

by Johannes Gasteiger, Stefan Weißenberger and Stephan Günnemann

Published at the Conference on Neural Information Processing Systems (NeurIPS) 2019

Note that the first author's name has changed from Johannes Klicpera to Johannes Gasteiger.

Abstract

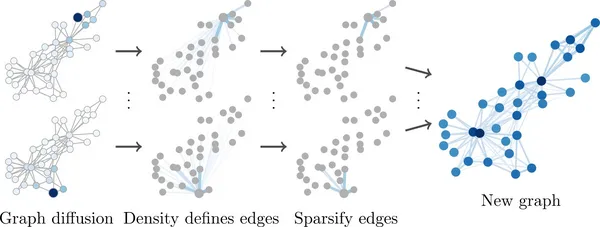

Graph convolution is the core of most Graph Neural Networks (GNNs) and usually approximated by message passing between direct (one-hop) neighbors. In this work, we remove the restriction of using only the direct neighbors by introducing a powerful, yet spatially localized graph convolution: Graph diffusion convolution (GDC). GDC leverages generalized graph diffusion, examples of which are the heat kernel and personalized PageRank. It alleviates the problem of noisy and often arbitrarily defined edges in real graphs. We show that GDC is closely related to spectral-based models and thus combines the strengths of both spatial (message passing) and spectral methods. We demonstrate that replacing message passing with graph diffusion convolution consistently leads to significant performance improvements across a wide range of models on both supervised and unsupervised tasks and a variety of datasets. Furthermore, GDC is not limited to GNNs but can trivially be combined with any graph-based model or algorithm (e.g. spectral clustering) without requiring any changes to the latter or affecting its computational complexity.
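The abstract describes GDC only in words. Below is a minimal dense NumPy sketch of the general recipe, built on our own assumptions: a symmetric transition matrix T = D^{-1/2} A D^{-1/2} with self-loops, diffusion S = Σ_k θ_k T^k summed in closed form, ε-threshold sparsification, and column normalization afterwards. It covers the two examples the abstract names: personalized PageRank (θ_k = α(1-α)^k) and the heat kernel (θ_k = e^{-t} t^k / k!). The function names (`gdc`, `sym_transition`, ...) and the defaults `alpha=0.15`, `t=5.0`, `eps=1e-4` are hypothetical choices for illustration, not the authors' reference implementation.

```python
import numpy as np


def sym_transition(adj: np.ndarray, self_loops: bool = True) -> np.ndarray:
    """Return T = D^{-1/2} A D^{-1/2}, optionally after adding self-loops to A."""
    a = adj + np.eye(adj.shape[0]) if self_loops else adj.astype(float)
    deg = a.sum(axis=1)  # assumes no isolated nodes when self_loops=False
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def ppr_diffusion(t_mat: np.ndarray, alpha: float = 0.15) -> np.ndarray:
    """Personalized PageRank, theta_k = alpha (1 - alpha)^k, summed in closed form:
    S = alpha (I - (1 - alpha) T)^{-1}."""
    n = t_mat.shape[0]
    return alpha * np.linalg.inv(np.eye(n) - (1.0 - alpha) * t_mat)


def heat_diffusion(t_mat: np.ndarray, t: float = 5.0) -> np.ndarray:
    """Heat kernel, theta_k = e^{-t} t^k / k!, summed in closed form:
    S = exp(-t (I - T)), computed from the eigendecomposition of the symmetric T."""
    eigvals, eigvecs = np.linalg.eigh(t_mat)
    return (eigvecs * np.exp(-t * (1.0 - eigvals))) @ eigvecs.T


def gdc(adj: np.ndarray, method: str = "ppr", eps: float = 1e-4, **kwargs) -> np.ndarray:
    """Hypothetical dense GDC sketch: diffuse, sparsify by threshold eps, renormalize."""
    t_mat = sym_transition(adj)
    if method == "ppr":
        s = ppr_diffusion(t_mat, **kwargs)
    elif method == "heat":
        s = heat_diffusion(t_mat, **kwargs)
    else:
        raise ValueError(f"unknown diffusion method: {method}")
    s = np.where(s >= eps, s, 0.0)  # epsilon-threshold sparsification
    # Diagonal entries of S stay above eps here, so no column sum is zero.
    return s / s.sum(axis=0, keepdims=True)  # column-normalize the sparsified matrix


# Toy example: path graph 0-1-2-3 (illustrative data, not from the paper).
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
s_ppr = gdc(adj, method="ppr", alpha=0.15)
s_heat = gdc(adj, method="heat", t=5.0)
```

In the toy example, nodes 0 and 2 are not adjacent in `adj`, but they receive a nonzero weight in `s_ppr` and `s_heat`. This illustrates how diffusion moves beyond one-hop neighbors while weights still decay with distance. Since the output is simply another weighted adjacency matrix, it can replace `adj` as the input to a GNN or to spectral clustering. The dense inverse and eigendecomposition cost O(N³), so this sketch is only suitable for small graphs. Large graphs would need sparse or approximate computation.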

Cite

Please cite our paper if you use the model, experimental results, or our code in your own work:

@inproceedings{gasteiger_diffusion_2019,
  title = {Diffusion Improves Graph Learning},
  author = {Gasteiger, Johannes and Wei{\ss}enberger, Stefan and G{\"u}nnemann, Stephan},
  booktitle = {Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2019}
}