A Compact and Efficient Neural Data Structure for Mutual Information Estimation in Large Timeseries

Fatemeh Farokhmanesh¹, Christoph Neuhauser¹, Rüdiger Westermann¹

¹ Chair of Computer Graphics and Visualization, Technical University of Munich, Germany

Abstract

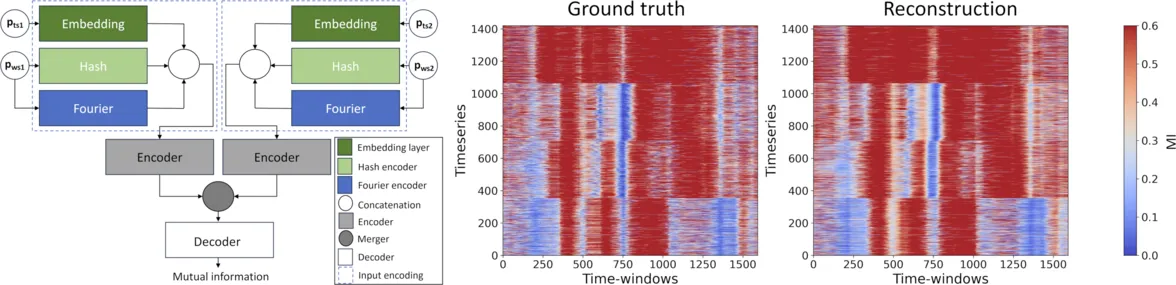

Database systems face challenges when using mutual information (MI) for analyzing non-linear relationships between large timeseries, due to computational and memory requirements. Interactive workflows are especially hindered by long response times. To address these challenges, we present timeseries neural MI fields (TNMIFs), a compact data structure that has been trained to reconstruct MI efficiently across various time-windows and window positions in large timeseries. We demonstrate learning and reconstruction with a large timeseries dataset comprising 1420 timeseries, each storing data at 1639 timesteps. While the learned data structure consumes only 45 megabytes, it answers queries for the MI estimates between the windows in a selected timeseries and the corresponding windows in all other timeseries within 44 milliseconds. Given a measure of similarity between timeseries based on windowed MI estimates, even the matrix showing all mutual timeseries similarities can be computed in less than 32 seconds. To support measuring dependence between lagged timeseries, an extended data structure learns to reconstruct MI to positively (future) and negatively (past) lagged windows. Using a maximum lag of 64 in both directions decreases query times by about a factor of 10.
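To make the kind of query described above concrete, the following minimal sketch computes it by brute force: the MI between one window of a reference timeseries and the corresponding (optionally lagged) window in every other timeseries. This is the expensive computation that a learned structure such as a TNMIF is meant to replace, not the TNMIF itself. The function names `histogram_mi` and `windowed_mi_query`, the parameter `bins`, and the choice of a simple histogram (plug-in) estimator are assumptions made for illustration; the abstract does not state which MI estimator the authors use.

```python
import numpy as np

def histogram_mi(x, y, bins=16):
    """Plug-in MI estimate (in nats) from a 2D histogram of two equally long windows."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()              # joint probabilities
    px = pxy.sum(axis=1, keepdims=True)    # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal of y, shape (1, bins)
    nz = pxy > 0                           # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))

def windowed_mi_query(series, ref, start, length, lag=0, bins=16):
    """MI between window [start, start+length) of series[ref] and the
    window shifted by `lag` timesteps in every timeseries (hypothetical helper)."""
    n_series, n_steps = series.shape
    lo = start + lag                       # start of the lagged window
    if start < 0 or start + length > n_steps or lo < 0 or lo + length > n_steps:
        raise ValueError("window (or lagged window) is out of range")
    w_ref = series[ref, start:start + length]
    return np.array([
        histogram_mi(w_ref, series[j, lo:lo + length], bins)
        for j in range(n_series)
    ])
```

With `series` stored as an array of shape `(num_timeseries, num_timesteps)`, a call such as `windowed_mi_query(series, ref=0, start=100, length=256, lag=-64)` returns one MI estimate per timeseries. The cost grows with the number of timeseries, the window length, and the number of window positions and lags queried, which is the motivation for a compact learned representation.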

Associated publications

A Compact and Efficient Neural Data Structure for Mutual Information Estimation in Large Timeseries

Fatemeh Farokhmanesh, Christoph Neuhauser, Rüdiger Westermann

36th International Conference on Scientific and Statistical Database Management (SSDBM) 2024
