Volumetric Isosurface Rendering with Deep Learning-Based Super-Resolution

S. Weiss¹, M. Chu¹, N. Thuerey¹, R. Westermann¹

¹Computer Graphics & Visualization Group, Technische Universität München, Germany

Abstract

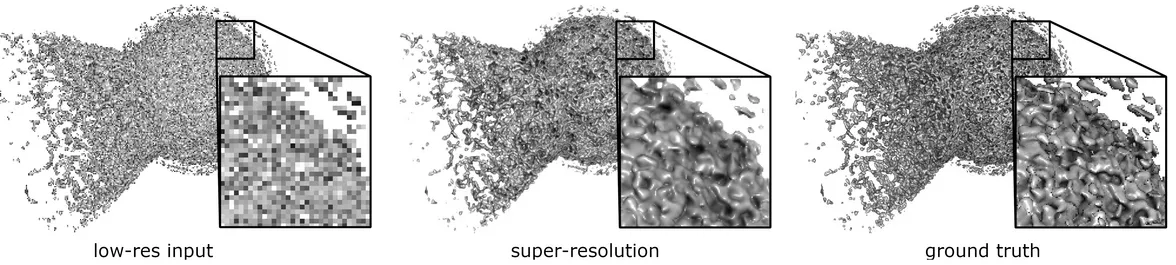

Rendering an accurate image of an isosurface in a volumetric field typically requires large numbers of data samples. Reducing the number of required samples lies at the core of research in volume rendering. With the advent of deep learning networks, a number of architectures have been proposed recently to infer missing samples in multi-dimensional fields, for applications such as image super-resolution and scan completion. In this paper, we investigate the use of such architectures for learning the upscaling of a low-resolution sampling of an isosurface to a higher resolution, with high-fidelity reconstruction of spatial detail and shading. We introduce a fully convolutional neural network to learn a latent representation generating a smooth, edge-aware normal field and ambient occlusions from a low-resolution normal and depth field. By adding a frame-to-frame motion loss into the learning stage, the upscaling can consider temporal variations and achieves improved frame-to-frame coherence. We demonstrate the quality of the network for isosurfaces that were never seen during training, and discuss remote and in-situ visualization as well as focus+context visualization as potential applications.
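To make the idea of a frame-to-frame motion loss more concrete, the following is a minimal NumPy sketch of one common formulation: the previous upscaled frame is warped to the current frame using per-pixel screen-space motion, and the squared difference to the current upscaled frame is penalized. This is an illustration under stated assumptions, not the paper's implementation; the function names `warp_nearest` and `temporal_loss`, the `(dx, dy)` pixel-unit flow convention, and the nearest-neighbor lookup are all hypothetical choices made for brevity.

```python
import numpy as np

def warp_nearest(prev, flow):
    """Backward-warp `prev` (H, W, C) with per-pixel motion `flow` (H, W, 2).

    Assumption: flow[..., 0] is dx and flow[..., 1] is dy, in pixels, describing
    how content moved from the previous frame to the current one. Lookups use
    nearest-neighbor sampling and are clamped at the image border.
    """
    h, w = prev.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs - flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys - flow[..., 1]).astype(int), 0, h - 1)
    return prev[src_y, src_x]

def temporal_loss(curr, prev, flow):
    """Mean squared difference between the current frame and the warped previous frame."""
    return float(np.mean((curr - warp_nearest(prev, flow)) ** 2))
```

In a training setup, a term of this kind would typically be added to the per-frame reconstruction losses with a weighting factor, and the warp would use a differentiable (for example bilinear) lookup rather than the nearest-neighbor lookup used here.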

Associated publications

Volumetric Isosurface Rendering with Deep Learning-Based Super-Resolution

S. Weiss, M. Chu, N. Thuerey, R. Westermann

TVCG 2019
